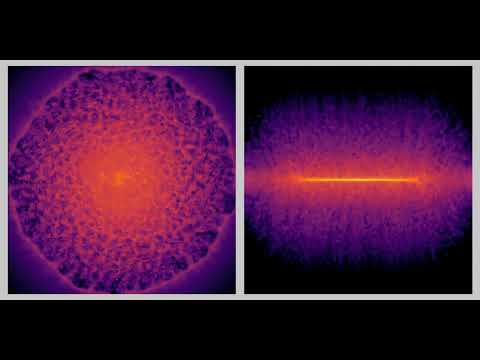

Researchers have created the first high-resolution simulation of the Milky Way galaxy that accurately represents more than 100 billion individual stars, following them over a period of 10,000 years. The feat was accomplished by integrating artificial intelligence (AI) with conventional numerical simulations, yielding a model that resolves 100 times more individual stars than previous state-of-the-art approaches and runs more than 100 times faster.

The study, published in Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis, marks a significant advance at the intersection of astrophysics, high-performance computing, and AI. Beyond its implications for understanding galactic evolution, this new methodology has the potential to revolutionize modeling in other complex fields, such as climate science and weather forecasting.

The Challenge of Simulating the Milky Way

Astrophysicists have long sought to create realistic simulations of the Milky Way galaxy, down to the level of individual stars. Such models are crucial for testing theories of galactic formation, structure, and stellar evolution against real-world observations. However, accurate galaxy simulations are notoriously difficult because of the wide range of physical processes involved (gravity, fluid dynamics, supernova explosions, and element synthesis), which operate on vastly different scales of space and time.

Until now, scientists have been unable to model large galaxies like the Milky Way while maintaining star-level resolution. Existing state-of-the-art simulations top out at a total mass of roughly one billion suns, about a hundredth of the Milky Way's more than 100 billion stars. Applied to the Milky Way, the smallest “particle” in such a model therefore stands for a cluster of about 100 stars. This averaging obscures the behavior of individual stars, limiting the accuracy of simulations.

A key bottleneck is the time step required for accurate modeling. Rapid changes at the star level, such as supernova evolution, can only be captured if the simulation progresses in short increments.

Computational Limits and the Need for Innovation

Reducing the time step, however, demands far more computational resources, because the same span of simulated time must be covered in many more increments. Even with current technology, simulating the Milky Way down to individual stars would require 315 hours for every million years of simulation time. At this rate, simulating even one billion years of galactic evolution would take about 36 years of real-world time.
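The 36-year figure is plain unit conversion and can be checked in a few lines. The sketch below uses the article's rate of 315 hours per million simulated years; the function name and the 365.25-day year are illustrative assumptions, not taken from the study.

```python
# Rough check of the conventional-simulation estimate quoted in the article.
HOURS_PER_YEAR = 24 * 365.25  # assumption: average calendar year


def wall_clock_hours(sim_years, hours_per_myr):
    """Real-world hours needed to cover sim_years of simulated time."""
    return sim_years / 1_000_000 * hours_per_myr


conventional_hours = wall_clock_hours(1_000_000_000, 315)
conventional_years = conventional_hours / HOURS_PER_YEAR

print(f"{conventional_hours:,.0f} hours ~ {conventional_years:.1f} years")
# prints "315,000 hours ~ 35.9 years"
```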

Simply adding more supercomputer cores is not a viable solution. Increasing core count does not necessarily translate to faster processing due to diminishing returns in efficiency, and the energy consumption would be unsustainable.
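One standard way to picture these diminishing returns is Amdahl's law, which caps the speedup of a program by the fraction of work that cannot be parallelized. The article does not name a scaling model, so this is only a textbook illustration; the serial fraction of 0.1% is a hypothetical value, not a measurement from the study.

```python
# Illustration of diminishing returns using Amdahl's law.
# Assumption: 0.1% of the work is strictly serial (hypothetical value).

def amdahl_speedup(cores, serial_fraction):
    """Ideal speedup on `cores` cores when `serial_fraction` cannot be parallelized."""
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / cores)


for cores in (1_000, 10_000, 100_000, 1_000_000):
    speedup = amdahl_speedup(cores, serial_fraction=0.001)
    efficiency = speedup / cores  # fraction of the ideal linear speedup achieved
    print(f"{cores:>9,} cores: speedup {speedup:7.1f}, efficiency {efficiency:6.2%}")
```

Under this assumption the speedup can never exceed 1,000, however many cores are added, while energy use keeps growing with the core count. Real codes also pay communication costs that this simple model ignores.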

The AI-Powered Breakthrough

To overcome these limitations, Keiya Hirashima at the RIKEN Center for Interdisciplinary Theoretical and Mathematical Sciences (iTHEMS) in Japan, along with colleagues from The University of Tokyo and Universitat de Barcelona in Spain, developed a novel approach. They combined a deep learning surrogate model with physical simulations.

The surrogate model was trained on high-resolution simulations of supernovae and learned to predict the expansion of surrounding gas in the 100,000 years following an explosion, without requiring additional computational resources from the rest of the model. This AI shortcut allowed the simulation to simultaneously model the overall dynamics of the galaxy and fine-scale phenomena like supernovae.
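The control flow described above can be sketched as a toy loop. This is not the team's code: the one-dimensional gas grid, the `Supernova` record, and `surrogate_predict` (which merely spreads the injected energy evenly) are hypothetical stand-ins for the real simulation state and the trained network. The only number taken from the article is the 100,000-year prediction horizon.

```python
from dataclasses import dataclass

SURROGATE_HORIZON_YR = 100_000  # prediction horizon quoted in the article


@dataclass
class Supernova:
    cell: int      # index of the exploding cell on a hypothetical 1D grid
    time_yr: int   # simulation time of the explosion
    energy: float  # injected energy, arbitrary units


def surrogate_predict(patch, energy):
    """Stand-in for a trained network: returns the patch as it might look
    SURROGATE_HORIZON_YR after the explosion. Here the energy is simply
    spread evenly over the patch, which conserves the total."""
    share = energy / len(patch)
    return [value + share for value in patch]


def run(n_cells, n_steps, dt_yr, supernovae, radius=2):
    gas = [1.0] * n_cells
    pending = []  # (ready_time, start_index, predicted_patch)
    for step in range(n_steps):
        t = step * dt_yr
        # Hand each new explosion to the surrogate instead of shrinking dt.
        for sn in supernovae:
            if t <= sn.time_yr < t + dt_yr:
                lo = max(0, sn.cell - radius)
                hi = min(n_cells, sn.cell + radius + 1)
                predicted = surrogate_predict(gas[lo:hi], sn.energy)
                pending.append((sn.time_yr + SURROGATE_HORIZON_YR, lo, predicted))
        # Galaxy-wide physics would advance here with the coarse step (omitted).
        # Merge back every prediction whose horizon falls within this step.
        still_pending = []
        for ready, lo, predicted in pending:
            if ready <= t + dt_yr:
                gas[lo:lo + len(predicted)] = predicted
            else:
                still_pending.append((ready, lo, predicted))
        pending = still_pending
    return gas


gas = run(n_cells=10, n_steps=100, dt_yr=2_000,
          supernovae=[Supernova(cell=5, time_yr=10_000, energy=5.0)])
```

The point of the design is that the global time step `dt_yr` never has to shrink to follow the explosion: the patch around each supernova is predicted in one shot and written back once its 100,000 years have elapsed.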

The team verified the simulation’s performance by comparing its output against large-scale tests run on RIKEN’s supercomputer Fugaku and The University of Tokyo’s Miyabi Supercomputer System.

Results and Broader Implications

The new method not only enables individual-star resolution in large galaxies with over 100 billion stars but also dramatically accelerates the simulation. Simulating one million years now takes only 2.78 hours, meaning that the desired one billion years could be modeled in just under 116 days, rather than roughly 36 years.
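The size of the gain follows directly from the two rates the article quotes, 315 hours and 2.78 hours per million simulated years. The short sketch below works it out; the variable names are illustrative.

```python
# Comparing the two rates quoted in the article (hours per million simulated years).
CONVENTIONAL_HOURS_PER_MYR = 315.0
HYBRID_HOURS_PER_MYR = 2.78

MYR_IN_ONE_BILLION_YEARS = 1_000

speedup = CONVENTIONAL_HOURS_PER_MYR / HYBRID_HOURS_PER_MYR
hybrid_days = HYBRID_HOURS_PER_MYR * MYR_IN_ONE_BILLION_YEARS / 24

print(f"speedup: about {speedup:.0f}x")
print(f"one billion years: about {hybrid_days:.1f} days")
```

The ratio comes out at roughly 113, consistent with the claim of a model that runs more than 100 times faster.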

Beyond astrophysics, this approach has the potential to transform other multi-scale simulations in fields such as weather, oceanography, and climate science, where linking small-scale and large-scale processes is crucial.

“I believe that integrating AI with high-performance computing marks a fundamental shift in how we tackle multi-scale, multi-physics problems across the computational sciences,” says Hirashima.

This breakthrough demonstrates the power of combining AI and traditional computing to overcome previously insurmountable limitations in scientific modeling. The implications for future research across multiple disciplines are significant.